The season finale of NBC’s The Voice featured a joint performance of "My Universe" between Coldplay and South Korean boy band BTS, but with a twist—BTS were represented with 3D virtual avatars.

The two bands played together in mixed reality, with Coldplay performing in person and the BTS band members created via volumetric capture and rendered live using Unreal Engine 4.27.1.

Planning for the AR project began in August between Coldplay Creative; Dimension Studio, which shot the footage of the BTS band members for the original music video; and All Of It Now (AOIN), a virtual production agency. Coldplay selected The Voice for the performance because of its experienced production team and its studio environment and infrastructure.

Dimension Studio provided the volumetric capture assets of BTS, with holographic treatment, in two formats: an Alembic sequence and a special mp4 export using Microsoft’s SVF Plugin for Unreal Engine 4.27.1. Because of the high data rate of playing back 7 Alembic sequences simultaneously, the mp4 approach was selected as the preferred method, and both the AOIN and Dimension teams moved forward with creative effects and sequencing.

Live Design talked to All Of It Now about the main challenges of the project.

Live Design: The effect has been described as a hologram, but it was not visible on stage to Coldplay or the audience during The Voice, only to the people watching at home.

All Of It Now: Correct, Coldplay were not able to see the AR effect while performing, but we worked with (LD) Sooner Routhier to use specific floor lights on stage to help Chris Martin identify which AR performers were on stage, and when. We made heavy use of previsualization to give the Coldplay team visibility into the creative sequencing behind each moment, as well as show the Voice director what types of shots worked well, and what to avoid.

LD: If Coldplay wanted to recreate this event during the upcoming tour, for example during a livestream of one of the dates, how difficult would it be to accomplish? Is there a plug and play version of this once you have the BTS tape?

AOIN: The great thing about volumetric video is that it does make this content more portable across more shows, and we have had prior experience with touring AR. We were one of the first companies to tour an AR effect for BTS’ 2019 Love Yourself, Speak Yourself tour.

The most difficult part of touring an AR effect is the AR camera calibration process, which can be time consuming, and not for the faint of heart, but totally possible with the right amount of planning.

LD: Is there a way for live audience members to see this? Presumably if the livestream was played back on IMAG (although what kind of latency would there be on that?) but is there a way to present actual holograms on stage for a live audience?

AOIN: We ran into the live audience question a lot on the 2019 BTS tour, and the conversation we have about it is generally about how prominent a role the IMAG screens play in stadium level shows, and how the vast majority of the viewing audience end up watching the performance on the IMAG screens, due to the distance between their seats and the actual performance. This also applies to broadcast, and for the live audience experience with The Voice, we worked with production on “coaching the audience” to respond to the key AR moments as if the performers were really on stage.

Latency between the standard IMAG and the AR feed is typically 5-7 frames. This latency is generally acceptable in stadium shows, as the delay of the PA to the back of the stadium can often match the additional latency created by this AR workflow. For this show, as it was broadcast, we were able to delay the standard camera feeds to match the latency of the AR feeds, which made cutting between AR and non-AR cameras much easier for the director.

That said, this is a conversation we have with creative and technical parties early on in the process, to make sure that we’re aligned on expectations, and have strategies to solve for this added latency.
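As a rough illustration of the latency figures above, the sketch below converts 5 to 7 frames into milliseconds and into the distance sound travels in that time. The 29.97 FPS rate is the broadcast rate cited for The Voice; applying it to a stadium IMAG feed, and the 343 m/s speed of sound (dry air at about 20 °C), are assumptions made here for the arithmetic, not figures from AOIN.

```python
from fractions import Fraction

# 29.97 FPS expressed exactly as 30000/1001.
NTSC_FPS = Fraction(30000, 1001)
# Assumption: speed of sound in dry air at about 20 degrees C.
SPEED_OF_SOUND_M_S = 343.0


def frames_to_ms(frames: int, fps: Fraction = NTSC_FPS) -> float:
    """Duration of `frames` video frames, in milliseconds."""
    return float(frames / fps * 1000)


def matching_pa_distance_m(frames: int, fps: Fraction = NTSC_FPS) -> float:
    """Distance from the PA at which the acoustic delay equals the video latency."""
    return frames_to_ms(frames, fps) / 1000 * SPEED_OF_SOUND_M_S


if __name__ == "__main__":
    for frames in (5, 6, 7):
        print(
            f"{frames} frames = {frames_to_ms(frames):.1f} ms, "
            f"matched by PA delay at about {matching_pa_distance_m(frames):.0f} m"
        )
```

Under these assumptions, 5 to 7 frames is roughly 167 to 234 ms, which is the delay sound accumulates over roughly 57 to 80 m. For broadcast, the same frame count is the amount by which the non-AR camera feeds would need to be delayed.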

LD: I saw that one of the challenges AOIN overcame was a difference in the taped song length and the length of the version used on The Voice. Is there a way to edit this on the fly?

AOIN: So in order to solve this difference between the music video arrangement and the broadcast arrangement, we ended up using an old content playback technique where we splice the timecode coming into our volumetric video playback rig. Because we’re still dealing with linear video and sequences, we were able to have band members “glitch” off screen, jump the timecode, and glitch them back on, so that we were able to match the performance to the updated arrangement. We’d want to avoid having content visible during that jump, and so we worked with the Coldplay team on finding the best moment to transition the performers off the stage, so that we could advance without it being noticeable.
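The timecode splice described above can be sketched as a lookup table that maps incoming show timecode to a frame of the linear recording. This is a minimal illustration, not AOIN's implementation: the `Splice` type, both function names, and all frame numbers are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Splice:
    show_frame: int     # incoming timecode (in frames) where this segment starts
    content_frame: int  # frame of the recorded clip to play at that moment


def content_frame_for(show_frame: int, splices: list[Splice]) -> int:
    """Map an incoming timecode frame to a frame of the linear recording.

    `splices` must be sorted by show_frame and begin at show_frame 0.
    """
    starts = [s.show_frame for s in splices]
    index = bisect_right(starts, show_frame) - 1
    if index < 0:
        raise ValueError("show_frame precedes the first splice")
    segment = splices[index]
    return segment.content_frame + (show_frame - segment.show_frame)


def jump_is_hidden(splice: Splice, hidden_ranges: list[tuple[int, int]]) -> bool:
    """True if the jump happens while the performers are off screen.

    Each range is (first_hidden_frame, last_hidden_frame), inclusive.
    """
    return any(start <= splice.show_frame <= end for start, end in hidden_ranges)


# Hypothetical numbers: at show frame 1000 the broadcast arrangement skips
# ahead 480 frames relative to the music video arrangement.
SPLICES = [Splice(0, 0), Splice(1000, 1480)]
# Hypothetical window in which the performers have "glitched" off screen.
HIDDEN = [(990, 1020)]

if __name__ == "__main__":
    print(content_frame_for(999, SPLICES))   # last frame before the jump
    print(content_frame_for(1000, SPLICES))  # first frame after the jump
    print(jump_is_hidden(SPLICES[1], HIDDEN))
```

Checking each jump against the hidden window mirrors the point made in the answer: the jump itself is simple, and the care goes into making sure no content is visible while it happens.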

Challenges

As with any groundbreaking innovation, there were some technical challenges to overcome with the mp4 approach. The current SVF plugin for UE4 did not have the ability to track the mp4 recordings properly to timecode. This required collaboration between AOIN and Microsoft to rewrite elements of the SVF plugin code so that the BTS performers remained in sync with Coldplay on stage.

Another challenge with using the original volumetric capture recordings was that the original BTS performances were recorded at 24 FPS to match the music video frame rate, but The Voice is produced and broadcast at 29.97 FPS. This created some lip sync issues when blending 24 FPS into a 29.97 FPS output, but AOIN was able to clean up the lip sync issues in post production.
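To see why the two frame rates do not line up cleanly, the sketch below maps each 29.97 FPS output frame to the 24 FPS source frame that is current at that instant. It assumes a simple hold-last-frame mapping with both clocks starting at zero; the article does not say how the SVF plugin or AOIN actually resampled the footage, so treat this only as an illustration of the uneven cadence.

```python
import math
from fractions import Fraction

SOURCE_FPS = Fraction(24)           # capture rate of the BTS recordings
OUTPUT_FPS = Fraction(30000, 1001)  # 29.97 FPS broadcast rate


def source_frame_for(output_frame: int) -> int:
    """Index of the 24 FPS frame on screen at the start of an output frame."""
    seconds = output_frame / OUTPUT_FPS
    return math.floor(seconds * SOURCE_FPS)


def repeated_source_frames(output_frames: int) -> list[int]:
    """Source frames that are held for more than one output frame."""
    mapping = [source_frame_for(n) for n in range(output_frames)]
    return sorted({f for f in mapping if mapping.count(f) > 1})


if __name__ == "__main__":
    print([source_frame_for(n) for n in range(10)])
    print(repeated_source_frames(10))
```

In this mapping, roughly one source frame in four is shown twice, so motion (including mouth movement) advances unevenly against continuous audio.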

The Creative Process

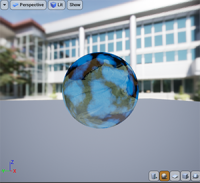

The creative pipeline involved reviews and discussions with both the Coldplay and Dimension teams. Some visual effects were created by the Dimension team and others by the AOIN team, with all efforts merged using GitLab as the version control platform. Due to the nature of performer mesh textures, the mp4 format created issues with some initial approaches to the hologram VFX, so the hologram glitch and on/off transition effects were refined by the AOIN technical team, with creative sequencing done inside the UE4 sequencer.

The AOIN team received 3D stage assets from The Voice team, and were able to put the performers inside a 3D representation of The Voice stage. This process enabled a crucial round of previsualization, where the Coldplay creative team could test AR performer configurations, tracking area, transition timing, and camera blocking before even setting foot inside the studio. AOIN was able to ingest the 3D stage assets and production camera plot into Unreal, creating a real-world-scale representation of the 7 AR cameras and the performers, so that the Coldplay creative team could visualize which performers would be visible for each moment and which cameras could best capture these moments. Previsualization renders became the best method to communicate these moments to all parties involved. This previsualization time was crucial in making the best use of the limited time onsite, and also helped unify all technical and creative efforts into a shared deliverable with shared understanding.

On Set Deployment/Challenges

In addition to producing this performance, The Voice team was actively producing the rest of the season. This meant scheduling and coordinating all aspects of this performance around the existing performance and production schedule of The Voice. The installation of this AR system relied on a few different installation phases: installing tracking stickers in the studio for the camera tracking system, mapping the tracking area, calibrating all 7 camera lenses for AR applications, rehearsing and camera blocking the performance with The Voice team, and then finally rehearsing the AR performance with Coldplay on stage, all taking place in 7 days. Deploying and calibrating 7 AR cameras in the midst of a fast-paced TV production schedule was no easy task, but with the help and support of The Voice production team, All Of It Now was able to coordinate the calibration and installation of 7 tracked cameras inside the studio around the existing production, without significant disruptions to the existing schedule.

“EIC Jerry Kaman’s support and supervision throughout the process was crucial to the success of this project,” said Danny Firpo, AOIN CEO. “This installation process is typically where most projects go wrong, but Jerry’s knowledge and experience of The Voice’s production schedule, and suggestion to bring in additional cameras is what made this process so successful.” AOIN worked with Stype and its Red Spy Fiber camera tracking system, augmented 2 jib cameras already used by the production, and then supplemented that camera package with 5 dedicated AR cameras, which allowed for calibration of the tracked cameras even while The Voice was shooting another segment.

Credits

Coldplay Creative Team

- Coldplay Creative Director - Phil Harvey

- Production Designer & Co-creative director - Misty Buckley

- Head of Visual Content - Sam Seager

- Lighting Designer - Sooner Routhier

- Project Manager Creative - Grant Draper

- Screens Director - Joshua Koffman

- Video Designer - Leo Flint

- Screen Content created by NorthHouse Creative

AOIN On Site Team

- Executive Producer - Danny Firpo

- Production Manager - Nicole Plaza

- Technical Director - Berto Mora

- Senior UE4 Technical Artist - Jeffrey Hepburn

- Senior AR Engineer - Neil Carman

- AR Engineer - Preston Altree

Volumetric Capture Studios: Dimension, London

- Executive Producer/Co-Managing Director: Simon Windsor

- Sales/Client Director: Yush Kalia

- Head of Production: Adam Smith

- Producer/Technical Director: Sarah Pearn

- Senior Lead Volumetric Technical Artist: Adrianna Polcyn

- Animator: Ben Crowe

- CG Lighting Artist: Marcella Holmes

Jump Studio (SK telecom), Seoul

- VP, Head of Metaverse Company: Jinsoo Jeon

- VP, Head of Metaverse Development: khwan Cho

- Executive Producer: Sung Yoon Baek

- Business Development: Taekeun Yoon

- Producer: Minhyuk Che

- Stage Supervisor: Gukchan Lim

The Voice Team

- Executive Producer - Amanda Zucker

- EIC - Jerry Kaman

- Director - Alan Carter

- Supervising Editor - Robert M. Malachowski, Jr., ACE

- Post Production Supervisor, Associate Director - Jim Sterling

- Assistant Editor - Joe Kaczorowski